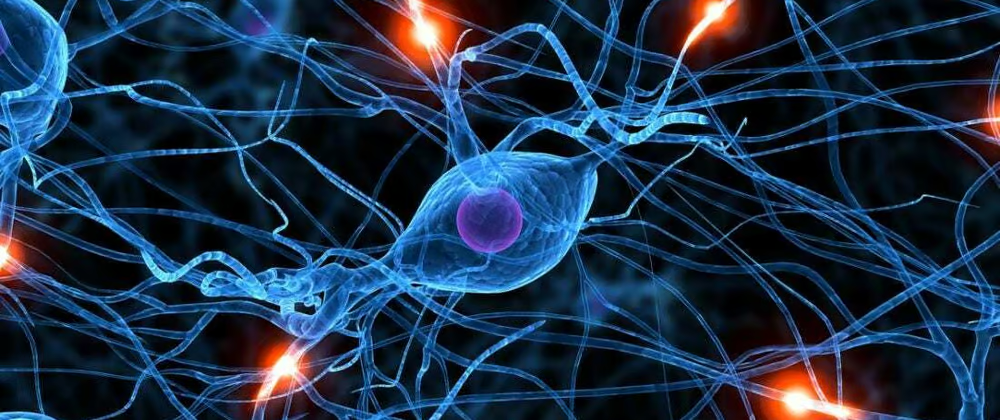

Neural networks, often regarded as the backbone of modern artificial intelligence (AI), are loosely inspired by the structure of the human brain. These computational models imitate, in a highly simplified way, how networks of neurons process information, enabling machines to recognize patterns, make decisions, and learn from examples. But neural networks aren’t just about raw power; they’re about innovation, flexibility, and expanding what technology can achieve.

In this blog, we’ll dive into what neural networks are, how they function, their real-world applications, and why they are becoming increasingly important in the evolving field of AI.

What Are Neural Networks?

At their core, neural networks are systems designed to recognize patterns. They consist of layers of interconnected nodes, or “neurons,” whose way of passing information along is loosely modeled on how biological neurons in the brain communicate.

In a neural network, each layer of neurons takes in inputs, combines them mathematically (each neuron computes a weighted sum of its inputs, adds a bias, and applies a nonlinear activation function), and passes the result to the next layer. This continues layer by layer until the network produces a final output. Learning happens by adjusting the “weights” of these connections, which determine how strongly one neuron influences another. Backpropagation computes how much each weight contributed to the error, and gradient descent uses that information to update the weights.
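A single neuron’s computation (a weighted sum of its inputs plus a bias, passed through an activation function) can be sketched in a few lines of Python. This is a minimal illustration, not a library implementation: the sigmoid activation and the specific weights and bias are assumptions chosen by hand, whereas a real network learns its weights during training and often uses other activations such as ReLU.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron: weighted sum of the inputs plus a bias, then an activation."""
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


# Illustrative values: two inputs, two hand-picked weights, one bias.
output = neuron([1.0, 0.5], weights=[0.8, -0.4], bias=0.1)
print(round(output, 4))  # z = 0.8 - 0.2 + 0.1 = 0.7, sigmoid(0.7) ≈ 0.6682
```

Changing a weight changes how strongly that input pushes the output up or down, which is exactly the quantity training adjusts.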

Neural networks are typically organized into three kinds of layers:

- Input Layer: This is the first layer, where raw data is fed into the system. For example, in image recognition, this could be the pixels of the image.

- Hidden Layers: These intermediate layers do the heavy lifting of processing the data, transforming the raw inputs into increasingly useful representations. A network with many hidden layers is called a deep neural network.

- Output Layer: This final layer produces the result, such as the classification of an image (e.g., identifying if an image contains a cat or a dog).
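To make the input → hidden → output flow concrete, here is a minimal forward pass in plain Python. It is a sketch under stated assumptions: the layer sizes (3 inputs, 4 hidden neurons, 2 outputs) and all weights are hypothetical, hand-picked values rather than trained ones; ReLU in the hidden layer and softmax at the output are common choices but not the only ones; and the “cat”/“dog” labels simply echo the example above.

```python
import math


def relu(z):
    return max(0.0, z)


def identity(z):
    return z


def dense(inputs, weights, biases, activation):
    """Fully connected layer: every output neuron sees every input.
    weights[j][i] is the weight from input i to output neuron j."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def softmax(scores):
    """Turn raw output scores into probabilities that sum to 1."""
    m = max(scores)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical weights: 3 input features -> 4 hidden neurons -> 2 output classes.
W_hidden = [[0.2, -0.5, 0.1],
            [0.7, 0.3, -0.2],
            [-0.4, 0.6, 0.5],
            [0.1, 0.1, 0.1]]
b_hidden = [0.0, 0.1, -0.1, 0.0]
W_out = [[0.5, -0.3, 0.8, 0.2],
         [-0.6, 0.4, -0.1, 0.3]]
b_out = [0.0, 0.0]


def forward(x):
    hidden = dense(x, W_hidden, b_hidden, relu)     # hidden layer
    scores = dense(hidden, W_out, b_out, identity)  # output layer (raw scores)
    return softmax(scores)                          # class probabilities


probs = forward([0.9, 0.1, 0.4])  # e.g. three pixel intensities
print(dict(zip(["cat", "dog"], probs)))
```

Adding more hidden layers means chaining more `dense` calls between the input and the output, which is what makes a network “deeper.”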

How Neural Networks Learn: Training Process

The process by which neural networks learn is one of trial and error, much like how humans learn from mistakes. When a neural network is first initialized, it starts with random weights. These weights are gradually adjusted during training by feeding the network examples of input data and comparing its output to the correct answer.

This learning process runs over multiple “epochs” (one epoch is a full pass through the training data), during which the network progressively refines its internal representation of the data. Key concepts in this learning phase include:

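- Backpropagation: After the network’s error is calculated, backpropagation works backward from the output layer, using the chain rule of calculus to compute how much each weight contributed to that error (the gradient of the loss with respect to each weight). These gradients tell the network how to adjust its weights to become more accurate over time.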

- Gradient Descent: This optimization technique uses the gradients to update each weight by a small step in the direction that reduces the loss, gradually shrinking the difference between the expected output and the network’s actual output. The size of each step is set by a parameter called the learning rate.

- Loss Function: This measures how far the network’s predictions are from the actual results. By minimizing the loss, neural networks improve their accuracy.
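The training ideas above (random initial weights, a loss function, backpropagation, gradient descent, and epochs) fit together in the minimal Python sketch below. To keep it short, it trains a single sigmoid neuron, where backpropagation reduces to one application of the chain rule, on a toy dataset (the logical OR of two bits) chosen for illustration. It assumes binary cross-entropy as the loss and updates the weights after every example (stochastic gradient descent); the learning rate, epoch count, and seed are arbitrary assumptions, not tuned values.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Toy dataset: logical OR of two bits, as (inputs, target) pairs.
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]


def train(epochs=2000, learning_rate=0.5, seed=0):
    rng = random.Random(seed)
    # Training starts from small random weights.
    w = [rng.uniform(-0.5, 0.5) for _ in range(2)]
    b = 0.0
    avg_loss = 0.0
    for _ in range(epochs):  # one epoch = one full pass over DATA
        total_loss = 0.0
        for x, y in DATA:
            # Forward pass: the neuron's current prediction.
            p = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
            # Loss function: binary cross-entropy (clamped to avoid log(0)).
            p_safe = min(max(p, 1e-12), 1.0 - 1e-12)
            total_loss += -(y * math.log(p_safe) + (1.0 - y) * math.log(1.0 - p_safe))
            # Backward pass (chain rule): for sigmoid + cross-entropy,
            # dLoss/dz simplifies to (p - y), so dLoss/dw_i = (p - y) * x_i.
            dz = p - y
            # Gradient descent: move each parameter against its gradient.
            w = [wi - learning_rate * dz * xi for wi, xi in zip(w, x)]
            b -= learning_rate * dz
        avg_loss = total_loss / len(DATA)
    return w, b, avg_loss


w, b, loss = train()
print(f"final average loss: {loss:.4f}")
for x, y in DATA:
    p = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
    print(x, "target", y, "prediction", round(p, 3))
```

In a multi-layer network the same chain-rule step is repeated layer by layer, from the output back toward the input, which is where the name backpropagation comes from.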

Types of Neural Networks

Neural networks come in various forms, each specialized for different tasks. Some of the most common types include:

- Feedforward Neural Networks (FNNs): The simplest type of neural network, where information flows in one direction, from input to output. These networks are commonly used in basic classification tasks.

- Convolutional Neural Networks (CNNs): Designed for processing grid-like data structures such as images, CNNs are widely used in image recognition tasks. These networks contain convolutional layers that slide small learned filters across the input to detect features such as edges, colors, and textures.

- Recurrent Neural Networks (RNNs): RNNs are well suited to sequential data because they maintain a hidden state that carries information from previous inputs. They are frequently used in time-series prediction, speech recognition, and language modeling.

- Generative Adversarial Networks (GANs): GANs consist of two competing networks—a generator and a discriminator. The generator creates new data, while the discriminator evaluates its authenticity. This “adversarial” process helps produce highly realistic data, from images to video and even music.
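Two of the ideas in this list, the filters in a CNN and the memory in an RNN, can be illustrated in plain Python. Both pieces are deliberately tiny and hand-set: the edge-detecting kernel is written by hand rather than learned, the RNN keeps a single number as its hidden state, and its weights (`w_h`, `w_x`, `b`) are hypothetical values chosen for illustration. Real CNN and RNN layers use many learned filters and vector-valued states.

```python
import math


def conv2d(image, kernel):
    """'Valid' 2D convolution: slide the kernel over the image (stride 1, no padding).
    Like most deep-learning libraries, this computes cross-correlation (no kernel flip)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def run_rnn(sequence, w_h=0.5, w_x=1.0, b=0.0):
    """Minimal RNN with a single-number hidden state h. At each step,
    h_t = tanh(w_h * h_{t-1} + w_x * x_t + b), so h carries information forward."""
    h = 0.0
    states = []
    for x in sequence:
        h = math.tanh(w_h * h + w_x * x + b)
        states.append(h)
    return states


# A 4x4 "image": dark left half (0), bright right half (1).
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]
# Hand-written vertical-edge filter; a CNN would learn its filters from data.
edge_kernel = [[-1, 1],
               [-1, 1]]
print(conv2d(image, edge_kernel))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]: peaks where dark meets bright

# The input is 1 only at the first step, yet the hidden state stays non-zero
# afterwards and decays gradually: a simple form of "memory".
print(run_rnn([1.0, 0.0, 0.0]))
```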

Real-World Applications of Neural Networks

Neural networks have revolutionized several industries. Here are some of the most impactful applications:

- Image and Speech Recognition: Neural networks power tools like facial recognition in social media and voice assistants like Siri and Alexa. They allow computers to recognize and categorize objects and voices in a human-like manner.

- Natural Language Processing (NLP): NLP applications, such as chatbots and translation services, rely on neural networks to understand and generate human language. For example, Google’s search algorithms utilize neural networks to better comprehend user queries.

- Healthcare: In the medical field, neural networks are used to analyze medical images, predict patient outcomes, and even assist in drug discovery. For instance, AI models can help radiologists identify tumors, and some studies have reported accuracy comparable to or better than traditional methods.

- Autonomous Vehicles: Self-driving cars use neural networks to interpret sensor data, understand their environment, and make real-time decisions. These networks are essential for tasks like object detection, lane tracking, and collision avoidance.

- Financial Services: Banks and financial institutions apply neural networks for tasks such as fraud detection, stock market prediction, and credit scoring. These models help process vast amounts of transactional data, identifying anomalies that might indicate fraudulent activity.

The Future of Neural Networks

The future of neural networks is incredibly promising. As research continues to refine these models, we can expect even greater breakthroughs, particularly in areas like AI ethics, explainability, and hardware acceleration. Some of the exciting trends include:

- Deep Learning: Much of the recent progress in accuracy has come from increasing network depth (i.e., the number of hidden layers). Deep learning models, which stack many such layers, are pushing boundaries in fields like autonomous driving, natural language understanding, and computer vision.

- Transfer Learning: In transfer learning, a neural network trained on one task can be repurposed for another, related task. This reduces the amount of data and time required to train models and opens the door to more versatile AI applications.

- Neural Networks and Hardware Innovations: Advances in hardware, such as GPUs and specialized chips like TPUs (Tensor Processing Units), are making it easier to train neural networks faster and with larger datasets. These developments allow for more complex models and more efficient AI systems.
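Transfer learning can be sketched by freezing the layers of an existing network and training only a new output layer (a “head”) for the new task. In the minimal Python sketch below, the “pretrained” hidden-layer weights are hypothetical, hand-set values standing in for weights that would normally be learned on a large source task; the new task, learning rate, and epoch count are likewise assumptions for illustration. The new task (output 1 when the two inputs differ) cannot be solved by a single neuron on the raw inputs, but it becomes easy on top of the reused features.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical stand-in for a pretrained hidden layer. In practice these weights
# would come from a network already trained on a large source task.
PRETRAINED_W = [[1.0, 1.0], [1.0, -1.0], [-1.0, 1.0]]
PRETRAINED_B = [0.0, 0.0, 0.0]


def features(x):
    """Frozen feature extractor: ReLU(W x + b). These weights are never updated."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(PRETRAINED_W, PRETRAINED_B)]


def predict(x, head_w, head_b):
    """New output layer (the head) applied on top of the frozen features."""
    return sigmoid(sum(w * f for w, f in zip(head_w, features(x))) + head_b)


def train_head(data, epochs=2000, learning_rate=0.1, seed=0):
    """Train only the head with gradient descent on a cross-entropy loss."""
    rng = random.Random(seed)
    head_w = [rng.uniform(-0.1, 0.1) for _ in PRETRAINED_W]
    head_b = 0.0
    for _ in range(epochs):
        for x, y in data:
            f = features(x)  # reused as-is, not trained
            p = sigmoid(sum(w * fi for w, fi in zip(head_w, f)) + head_b)
            dz = p - y       # dLoss/dz for sigmoid + cross-entropy
            head_w = [w - learning_rate * dz * fi for w, fi in zip(head_w, f)]
            head_b -= learning_rate * dz
    return head_w, head_b


# A small new task: output 1 when the two inputs differ.
new_task = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
head_w, head_b = train_head(new_task)
for x, y in new_task:
    print(x, "target", y, "prediction", round(predict(x, head_w, head_b), 3))
```

Only four parameters (three head weights and a bias) are trained here. In real frameworks the same idea usually means loading a pretrained model, marking its early layers as non-trainable, and replacing its final layer; some setups later unfreeze more layers for further fine-tuning.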

Challenges in Neural Networks

While neural networks offer tremendous potential, they are not without their challenges:

- Data Requirements: Neural networks often require vast amounts of labeled data to perform well, which can be expensive and time-consuming to gather.

- Training Time and Resources: Large neural networks need significant computational power and time to train, limiting their accessibility to smaller organizations or individuals without specialized hardware.

- Interpretability: Neural networks are often criticized as “black boxes” due to their lack of transparency. Understanding why a model makes a certain prediction is still a challenge in AI research.

Conclusion

Neural networks have become a cornerstone of AI and machine learning, driving innovation across industries. From image recognition to autonomous vehicles, the capabilities of these networks are expanding at an unprecedented rate. While challenges remain, particularly in terms of transparency and resource requirements, the ongoing advancements in neural network research promise a future where AI can solve increasingly complex problems and transform the world as we know it.

As the field of AI evolves, so will neural networks, offering even more powerful tools to drive future technologies. For more in-depth information and technical details, you can refer to the full paper here.
